Workshops

The first day of the conference, Tuesday 16 December 2025, comprises a range of workshops. Delegates will find these events especially valuable if their organisations are currently considering introducing new AI technologies.

There will be four half-day workshops. Delegates are free to choose any combination of sessions to attend. The programme of workshops is shown below. Note that the first session starts at 11 a.m. to reduce the need for delegates to stay in Cambridge on the previous night.

There is a lunch break from 12.30 to 13.15, and there are refreshment breaks from 14.45 to 15.15 and from 16.45 to 17.00.

Please register for either the workshops day (16 December) or a suitable combination of days (16-18 December).

Workshops organiser: Prof. Adrian Hopgood, University of Portsmouth, UK

Sessions 1 and 2 - Stream 1 (11.00-12.30 and 13.15-14.45 Peterhouse Lecture Theatre)

Generative AI

Chair: Dr Carla Di Cairano-Gilfedder, BT Labs, UK

Organising Committee: Prof. Lu Liu (University of Exeter), Prof. Huiyu Zhou (University of Leicester), Dr Erfu Yang (University of Strathclyde), Dr Simon Hadfield (University of Surrey), and Dr James Haworth (University College London)

Generative AI is rapidly reshaping the landscape of intelligent systems. This workshop covers cutting-edge research and visionary perspectives on the design, deployment, and governance of generative models and their real-world applications. It explores how generative models are enabling adaptive control, task planning, and embodied intelligence in dynamic environments. It also addresses GenAI robustness, interpretability, and alignment, which are critical pillars for deploying generative systems in high-stakes domains.

Programme:

11:00 Keynote: Generative AI in Robotics and Automation - Dr Erfu Yang, University of Strathclyde, UK

The rapid advancement of generative AI is transforming robotics and automation. Advanced generative AI technologies offer unprecedented capabilities by enabling robots to perform complex tasks through improved learning, perception, adaptation and control. However, integrating generative AI with robotics and automation in complex, uncertain, dynamic, and unstructured environments remains a significant challenge. This talk highlights some applications and recent advances of generative AI in robotics and automation, and addresses key challenges on the way to reliable and trustworthy real-world deployment in challenging environments, especially for natural multimodal human-robot interaction.

Slides

11:50 Stability in LLM Training: Challenges and Optimizer Solutions - Dr Tianjin Huang, University of Exeter, UK

Training large language models (LLMs) at scale is often hampered by instability, manifesting as gradient spikes, divergence, and sudden loss collapse. Such failures not only increase computational cost but also hinder the adoption of more efficient techniques, including low-bit training. This talk will explore the key challenges behind instability in LLM training, drawing on empirical observations from recent studies. I will then present recent optimizer-based solutions, such as adaptive gradient clipping, moment resetting, and quantization-aware methods, that help mitigate these issues and improve training stability. The presentation will conclude with open questions and future directions for designing stable optimizers that make LLM training more reliable and efficient.

Slides

Summary

12:30 Lunch

13:15 Keynote: Safety and Trustworthiness of GenAI: Is it deceiving us, or are we deceiving ourselves? - Dr Mark Post, University of York, UK

Generative Artificial Intelligence has seemingly taken the world by storm. Thanks to advances in computing hardware, open learning algorithms, and the availability of vast datasets on the Internet, models with billions to trillions of parameters are now capable of producing human-like responses based on far more knowledge than is "humanly" possible. The story of Artificial Intelligence is that of a species seeking safe, trustworthy companionship with its engineered, mechanistic companions. But are we giving this "Intelligence" too much credit? Can we, or should we, trust an automaton that we have created in our own image as our equal? Or have we somehow elevated it to a level at which we want to trust it more than ourselves? The future of Generative AI will critically depend not only on its own true capabilities, but also on our perception of them.

Slides

13:55 The Need for Gen AI in Effective Human-Robot Interactions - Dr Maria Elena Giannaccini, University of Nottingham, UK

The widespread integration of robots into everyday life continues to be limited by challenges related to public acceptability and trust. Recent breakthroughs in Generative AI demonstrate significant potential to address these barriers. This talk will explore the diverse opportunities for leveraging Generative AI to advance human–robot interaction and will highlight avenues for collaboration between the Generative AI and robotics research communities.

Slides

14:20 When AI Thinks Too Much: Uncovering Overthinking in Generative Models - Dr Jay Jin, University of Exeter, UK

Generative AI models such as large language models and vision-language models have achieved remarkable success, but at a steep computational cost. This talk introduces a new class of adversarial threats called overthinking attacks, which exploit model inefficiencies rather than output correctness. These attacks embed innocuous prompts that trigger excessive internal reasoning, dramatically increasing inference time, GPU usage, and energy consumption while producing identical outputs. We will explore how overthinking arises and present methods to detect and mitigate it using adaptive guardrails that monitor reasoning depth and resource patterns in real time. By exposing this overlooked vulnerability, the talk aims to reshape how we measure and secure AI robustness: not just in terms of correctness, but also in terms of efficiency, sustainability, and trustworthiness.

Slides

Summary

14:45 End

Sessions 1 and 2 - Stream 2 (11.00-12.30 and 13.15-14.45 Upper Hall)

Ethical and Legal Aspects of AI

Chairs:

Assoc. Prof. Dr Carlisle George

Prof. Dr Juan Carlos Augusto, Middlesex University London, UK

Artificial Intelligence (AI) is a field in continuous development that has recently become more prominent in the news. The pros and cons of its use in various contexts, and its possible impact on society, are now more openly discussed. Significant aspects of AI cause concern to experts and to the general public. This is especially true with regard to the use of unregulated AI in safety-critical applications. There are also potential problems arising from AI being used in many other systems without sufficient transparency about how, and to what end, it is used. These concerns and problems call for proper regulation of the development and use of AI in order to ensure its safety and conformity with human-centric values. This workshop brings together colleagues interested in, or actively trying to develop, solutions to protect society from the negative uses of AI, especially in the context of exploring legal and ethical aspects (including regulatory compliance, trustworthy AI, responsible AI, and AI governance).

The workshop will combine opportunities for discussion and debate with presentations of technical work through papers. One objective is to agree on community initiatives that may help with the regulation of AI developments at a national or international level.

Further details and call for papers.

Contact Details:

Assoc. Prof. Dr Carlisle George, C.George@mdx.ac.uk, Research Group on Aspects of Law & Ethics Related to Technology, Middlesex University London, UK

Prof. Dr Juan Carlos Augusto, J.Augusto@mdx.ac.uk, Research Group on Development of Intelligent Environments, Middlesex University London, UK

Sessions 3 and 4 - Stream 1 (15.15-16.45 and 17.00-18.30 Peterhouse Lecture Theatre)

Promoting Safer AI for Health and Care

Chairs:

Prof. Jeremy Wyatt, University of Southampton, UK (Chair, BCS Health & Care AI Group)

Prof. Philip Scott, University of Wales Trinity Saint David, UK (Chair, Mobilising Computable Biomedical Knowledge UK Chapter)

Like all service industries, health and care are very data- and decision-intensive, but they are more safety-critical than most other sectors. In this workshop we will explore how to promote safer innovation in health and care AI. We look forward to highly interactive multi-disciplinary discussions, stimulated by some excellent speakers.

Programme:

15:15 Welcome and aims of the workshop - Prof. Jeremy Wyatt, University of Southampton, UK

15:20 What restrictions need to be placed on AI applications in health and care to promote safety? - Marinos Ioannides, Head of Software & AI, Medicines and Healthcare products Regulatory Agency (MHRA), UK

15:40 Is validation enough, or should we ask for more evidence before widely disseminating health and care algorithms? - Prof. Jeremy Wyatt, University of Southampton, UK

16:00 From enthusiasm to evidence: ensuring safe implementation of AI scribes - Prof. Niels Peek, Chair of Data Science and Healthcare Improvement, THIS Institute, University of Cambridge, UK

16:20 Discussion

16:45 Tea break

17:00 Feedback on discussion

17:20 How can health and care systems mobilise the huge number of facts that practitioners need at the point of decision making safely and efficiently – are computable guidelines the answer? - Prof. Philip Scott, University of Wales Trinity Saint David, UK

17:40 Qualifying large language models for oversight in patient safety governance - Prof. Mark Sujan, Chair in Safety Science, University of York, UK

18:00 Discussion

18:30 End

Sessions 3 and 4 - Stream 2 (15.15-16.45 and 17.00-18.30 Upper Hall)

AI in Business and Finance

Chairs:

Prof. Carl Adams, Cosmopolitan University Abuja, Nigeria / Mobi Publishing, Chichester, UK

Helena Wardle, Founder & CEO of Money Means, UK and Tom Richards, Co-founder & CTO of Money Means, UK

Programme:

15.15-16.45: AI for project management

This first session will explore the range of AI tools and applications within project management. It will draw upon case studies from schools and from business and government organisations. It aims to stimulate discussion of the wider issues raised by AI in areas that affect human activity, such as project management.

It will also discuss mixing AI and human agents within project activity, the implications of an AI task or project manager, and what extra elements need to be included in AI systems.

The session encourages discussion and debate around possible future scenarios.

Session team: Prof. Carl Adams (Mobi Publishing/Cosmopolitan University); Dr Saurav Sthapit (Coventry University); Damilola Kehinde (Cosmopolitan University); Dr Hari Pandey (Bournemouth University); Prof. Rabiu Ibrahim (Cosmopolitan University)

- Session Overview (Prof. Carl Adams, Dr Hari Pandey, Dr Saurav Sthapit)

- AI in Project Management - an overview of PM Tasks (Damilola Kehinde)

- Insights from workshops/focus groups on the application of AI in law, The Next Big Thing, and education (NAPPS), involving users to identify their main activities (Prof. Rabiu Ibrahim, Prof. Carl Adams)

- Checking, validating and testing PM tasks (Prof. Carl Adams, Prof. Rabiu Ibrahim)

- Applying GenAI in PM tasks (All)

  - Mapping PM tasks with GenAI

  - Checking, validating and testing GenAI outputs

  - Impact assessments: EU AI Law Ready

- Session Review (Dr Saurav Sthapit, Dr Hari Pandey, +All)

16:45-17:00: Tea break

17:00-18:30: AI for financial management and advice

This second session will include presentations by AI engineers from Money Means. They will present the challenges and lessons learned from building Aida, a digital financial adviser. The session will demonstrate the real-world challenges of integrating AI components (LLM, vector stores, MCP, agentic and ACP) with deterministic and stochastic components to create an immersive digital product.

Sessions 3 and 4 - Stream 3 (15.15-16.45 and 17.00-18.30 Davidson Room)

Applied XAI in the field, exemplified with an aquatic weed-harvesting use case

Chair: Dr Christoph Manss, DFKI (Deutsches Forschungszentrum für Künstliche Intelligenz; German Research Centre for AI), Germany

Co-presenter: Dr Tarek El-Mihoub, Jade University of Applied Sciences, Germany

In the project HAI-x (Hybrid AI explainer), we work on interactive explanations of a system of AIs. As a use case, we consider route optimisation for weed harvesting on the Maschsee, a lake in Hanover, Germany. Using this use case, we present different AI and ML algorithms, together with ways of generating their explanations, passing those explanations to subsequent processes, algorithms, and users, and presenting the algorithmic results to the end user. Please see the project webpage for further information.

Programme:

15.15-16.45:

- Workshop and use case overview

- Explaining the detection of weeding areas with remote sensing

- AI for explanation of detected objects in SONAR data

- Prototype and counterfactual explanations

- Attention as a means of explanation for object detection in SONAR data

- Saliency maps for object detection in SONAR data

- Discussion

16:45-17:00: Tea break

17:00-18:30:

- Data fusion and how to provide levels of explainability (layers of explainability)

- Development of a user interface to present the explanations for this routing use case

- Counterfactual explanations for path planning

- Discussion

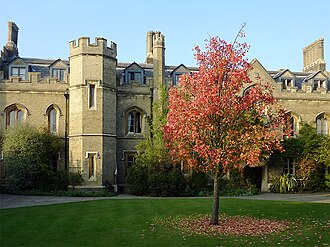

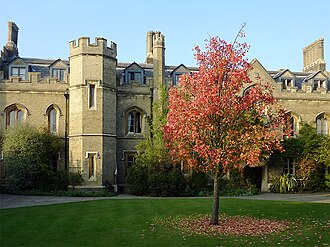

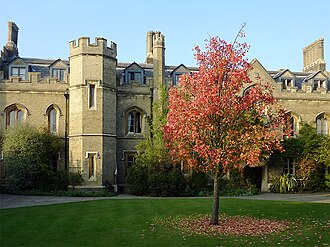

Peterhouse College, Cambridge, the venue for AI-2025